In timm, there are six different schedulers in total:

- CosineLRScheduler - SGDR: Stochastic Gradient Descent with Warm Restarts

- TanhLRScheduler - Stochastic Gradient Descent with Hyperbolic-Tangent Decay on Classification

- StepLRScheduler

- PlateauLRScheduler

- MultiStepLRScheduler

- PolyLRScheduler - SGDR scheduler with a polynomial annealing function.

In this tutorial we are going to look at each one of them in detail. We will also look at how to train models with these schedulers, either through the timm training script or by using them as standalone schedulers in custom PyTorch training scripts.

In this section we will look at the various available schedulers in timm.

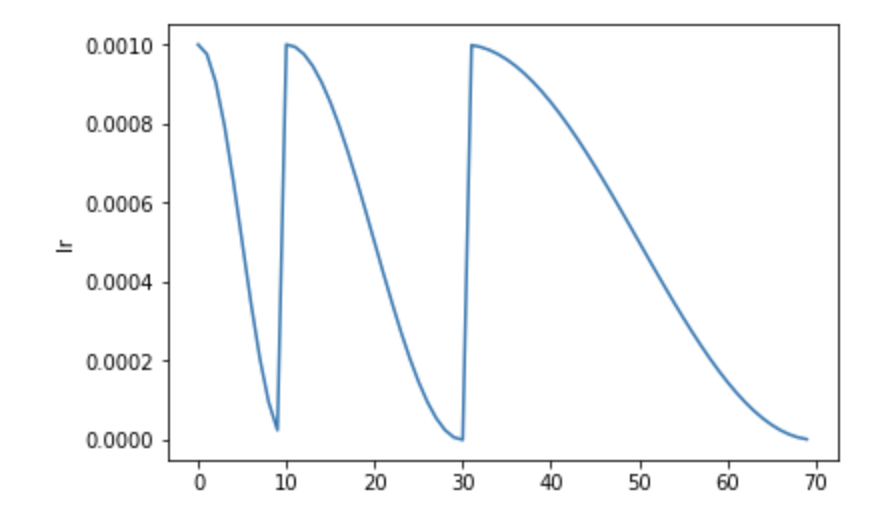

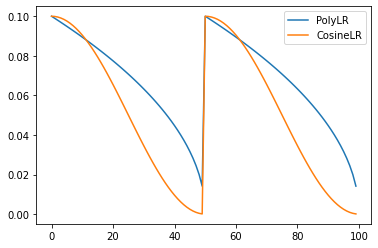

First, let's look at the CosineLRScheduler (SGDR), also referred to as the cosine scheduler in timm.

The SGDR scheduler, or Stochastic Gradient Descent with Warm Restarts scheduler, schedules the learning rate using a cosine schedule, but with a tweak. Within each cycle the learning rate is annealed from its initial value towards a minimum along a cosine curve, and after a set number of epochs it is reset to the initial value (a "warm restart").
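To make the restart behaviour concrete, here is a minimal pure-Python sketch of cosine annealing with warm restarts. It is not timm's implementation, which also handles warmup, noise and other options. The helper name `sgdr_lr` and its default values are hypothetical; `cycle_mul` illustrates the idea of stretching each new cycle, which is what the `--lr-cycle-mul` flag controls.

```python
import math


def sgdr_lr(epoch, base_lr=0.1, min_lr=1e-5, t_initial=10, cycle_mul=1.0):
    """Cosine-annealed LR with warm restarts (hypothetical helper, not timm API).

    Each cycle anneals from base_lr towards min_lr over t_i epochs, then the
    LR jumps back to base_lr. The cycle length is multiplied by cycle_mul
    after every restart.
    """
    if cycle_mul < 1.0:
        raise ValueError("this sketch assumes cycle_mul >= 1")
    t_i = t_initial  # length of the current cycle
    t_cur = epoch    # epochs elapsed inside the current cycle
    # Skip over completed cycles until `epoch` falls inside one.
    while t_cur >= t_i:
        t_cur -= t_i
        t_i *= cycle_mul
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * t_cur / t_i))


for epoch in (0, 5, 9, 10, 15):
    print(epoch, sgdr_lr(epoch))
```

With `cycle_mul=2.0`, the first cycle lasts 10 epochs, the second 20, the third 40, and so on, so restarts become rarer as training goes on.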

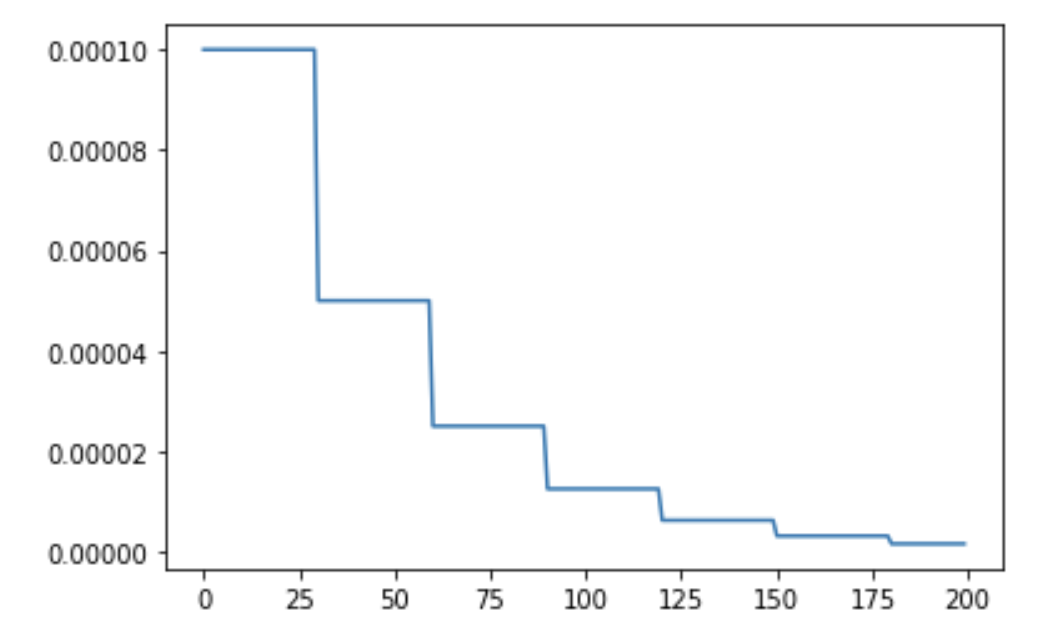

The StepLRScheduler is a basic step LR schedule with optional warmup and noise.

The schedule for StepLR annealing looks something like:

Every decay_epochs epochs, the learning rate is updated to be lr * decay_rate. In this StepLR example, decay_epochs is set to 30 and decay_rate is set to 0.5 with an initial lr of 1e-4, so the learning rate halves every 30 epochs: 1e-4 until epoch 30, then 5e-5 until epoch 60, then 2.5e-5, and so on.
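The step rule can be written as a one-line formula, lr = base_lr * decay_rate ** (epoch // decay_epochs). The sketch below is a hypothetical helper, not timm code; its parameter names follow the prose above, while timm's own StepLRScheduler names the decay period `decay_t`.

```python
def step_lr(epoch, base_lr=1e-4, decay_epochs=30, decay_rate=0.5):
    """Step decay: multiply the LR by decay_rate once every decay_epochs epochs."""
    num_decays = epoch // decay_epochs  # completed decay periods so far
    return base_lr * decay_rate ** num_decays


for epoch in (0, 29, 30, 59, 60, 90):
    print(epoch, step_lr(epoch))
```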

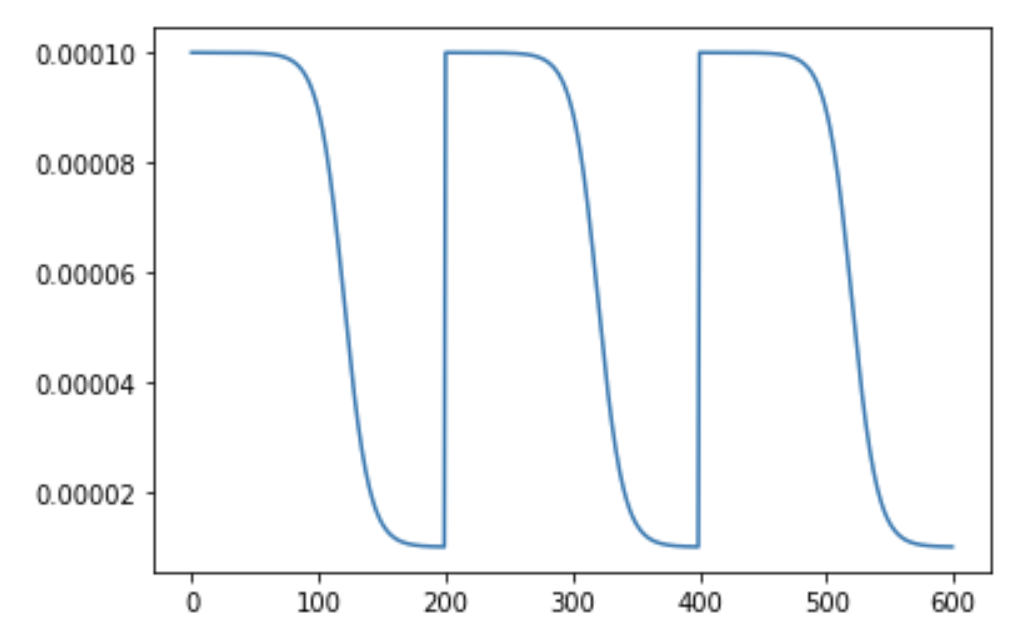

TanhLRScheduler is also referred to as tanh annealing, tanh being short for hyperbolic-tangent decay. The annealing using this scheduler looks something like:

It is similar to SGDR in the sense that the learning rate is reset to the initial lr after a certain number of epochs, but the annealing is done using the tanh function.
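A minimal sketch of tanh annealing with fixed-length restarts follows. Within each cycle the fraction completed `tr` goes from 0 to 1, and the lr follows min_lr + 0.5 * (base_lr - min_lr) * (1 - tanh(lb * (1 - tr) + ub * tr)). The helper `tanh_lr` and the bounds `lb=-7.0`, `ub=3.0` are assumptions for illustration, not a statement of timm's exact implementation or defaults.

```python
import math


def tanh_lr(epoch, base_lr=0.1, min_lr=1e-5, t_initial=10, lb=-7.0, ub=3.0):
    """Tanh annealing with warm restarts (hypothetical helper, not timm API).

    lb and ub (assumed values) pick the stretch of the tanh curve that one
    cycle covers: the LR starts close to base_lr (tanh(lb) is near -1) and
    ends close to min_lr (tanh(ub) is near +1).
    """
    t_cur = epoch % t_initial   # position inside the current cycle
    tr = t_cur / t_initial      # fraction of the cycle completed, in [0, 1)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 - math.tanh(lb * (1 - tr) + ub * tr))


for epoch in range(0, 12, 2):
    print(epoch, tanh_lr(epoch))
```

Compared with the cosine curve, tanh annealing keeps the lr high for longer and then drops it more sharply towards the end of each cycle.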

The PlateauLRScheduler is based on PyTorch's ReduceLROnPlateau scheduler, with optional warmup and noise. The basic idea is to track an eval metric and, if that metric stays stagnant for a certain number of epochs, reduce the lr in a step-wise fashion by multiplying it by a decay factor.
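The plateau logic can be sketched in a few lines. This is a simplified, hypothetical class for a metric where lower is better (e.g. a loss). It leaves out the threshold and cooldown options that PyTorch's ReduceLROnPlateau provides, and it is not timm or PyTorch code.

```python
class PlateauSketch:
    """Simplified reduce-on-plateau logic (hypothetical, not timm/PyTorch code).

    Tracks the best eval metric seen so far (lower is better). If the metric
    fails to improve for more than `patience` consecutive epochs, the LR is
    multiplied by `decay_rate` and the counter resets.
    """

    def __init__(self, lr=0.1, patience=2, decay_rate=0.5, min_lr=0.0):
        self.lr = lr
        self.patience = patience
        self.decay_rate = decay_rate
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0  # consecutive epochs without improvement

    def step(self, metric):
        if metric < self.best:
            self.best = metric
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        if self.bad_epochs > self.patience:
            self.lr = max(self.lr * self.decay_rate, self.min_lr)
            self.bad_epochs = 0
        return self.lr


sched = PlateauSketch()
for loss in [1.0, 0.9, 0.9, 0.9, 0.9, 0.8]:
    print(loss, sched.step(loss))
```

Here the loss stalls at 0.9 for three epochs after its last improvement, which exceeds `patience=2`, so the lr is halved once.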

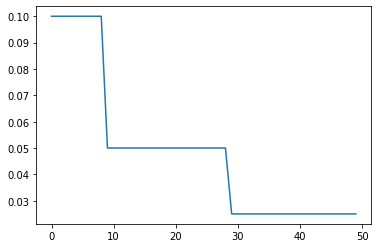

The MultiStepLRScheduler is an LR schedule that decays the learning rate at given epochs, with optional warmup and noise.

The schedule for MultiStepLRScheduler annealing (with decay steps at epochs 10 and 30) looks something like:

In this MultiStepLRScheduler example, decay_t=[10, 30] and decay_rate=0.5 with an initial lr of 0.1.

At epochs 10 and 30, the learning rate is updated to be lr * decay_rate, so it drops from 0.1 to 0.05 at epoch 10 and then to 0.025 at epoch 30.
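A minimal sketch of the multi-step rule: count how many milestones in `decay_t` have been reached and apply `decay_rate` that many times. The helper `multistep_lr` is hypothetical and follows the description above (decay exactly at epochs 10 and 30). It is not timm's source, and timm's epoch-indexing convention may differ slightly.

```python
import bisect


def multistep_lr(epoch, base_lr=0.1, decay_t=(10, 30), decay_rate=0.5):
    """Decay the LR by decay_rate at each milestone epoch in decay_t (must be sorted)."""
    # Number of milestones already reached, i.e. milestones <= epoch.
    num_decays = bisect.bisect_right(decay_t, epoch)
    return base_lr * decay_rate ** num_decays


for epoch in (0, 9, 10, 29, 30, 50):
    print(epoch, multistep_lr(epoch))
```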

PolyLRScheduler is also referred to as poly annealing, where poly stands for polynomial decay.

The annealing using this scheduler looks something like:

It is similar to the cosine and tanh schedulers in the sense that the learning rate is reset to the initial lr after a certain number of epochs, but the annealing is done using a polynomial function.
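Polynomial annealing with fixed-length restarts can be sketched as follows: within each cycle the lr follows min_lr + (base_lr - min_lr) * (1 - tr) ** power, where `tr` is the fraction of the cycle completed. The helper `poly_lr` and its defaults are hypothetical; `power=1.0` gives a straight linear decay, and larger powers make the lr fall faster early in the cycle. timm's PolyLRScheduler has its own parameters, warmup and noise on top of this idea.

```python
def poly_lr(epoch, base_lr=0.1, min_lr=0.0, t_initial=10, power=1.0):
    """Polynomial annealing with warm restarts (hypothetical helper, not timm API)."""
    t_cur = epoch % t_initial   # position inside the current cycle
    tr = t_cur / t_initial      # fraction of the cycle completed, in [0, 1)
    return min_lr + (base_lr - min_lr) * (1 - tr) ** power


for epoch in (0, 5, 9, 10):
    print(epoch, poly_lr(epoch), poly_lr(epoch, power=2.0))
```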

It is very easy to train our models using timm's training script. We simply pass the scheduler name with the --sched flag, along with the various scheduler hyperparameters.

- For SGDR, we pass in `--sched cosine`.

- For PlateauLRScheduler, we pass in `--sched plateau`.

- For TanhLRScheduler, we pass in `--sched tanh`.

- For StepLR, we pass in `--sched step`.

- For MultiStepLRScheduler, we pass in `--sched multistep`.

- For PolyLRScheduler, we pass in `--sched poly`.

Thus the call to the training script looks something like:

```bash
python train.py --sched cosine --epochs 200 --min-lr 1e-5 --lr-cycle-mul 2 --lr-cycle-limit 2
```
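To get a feel for what `--lr-cycle-mul 2 --lr-cycle-limit 2` asks for, the sketch below lists the warm-restart cycles for a given initial cycle length. Each cycle is `cycle_mul` times longer than the previous one, and only `cycle_limit` cycles are run; as we understand it, the lr is then held at the minimum. The helper `restart_cycles` and the initial cycle length `t_initial=100` are hypothetical. How train.py derives the actual cycle length from `--epochs` is not modelled here.

```python
def restart_cycles(t_initial, cycle_mul, cycle_limit):
    """List (start_epoch, length) for each warm-restart cycle (hypothetical helper)."""
    cycles = []
    start, length = 0, t_initial
    for _ in range(cycle_limit):
        cycles.append((start, length))
        start += length       # next cycle begins where this one ends
        length *= cycle_mul   # and is cycle_mul times longer
    return cycles


print(restart_cycles(t_initial=100, cycle_mul=2, cycle_limit=2))
```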